The Bridge and Beyond: AI, AR Revolutionize Maritime Decision Making

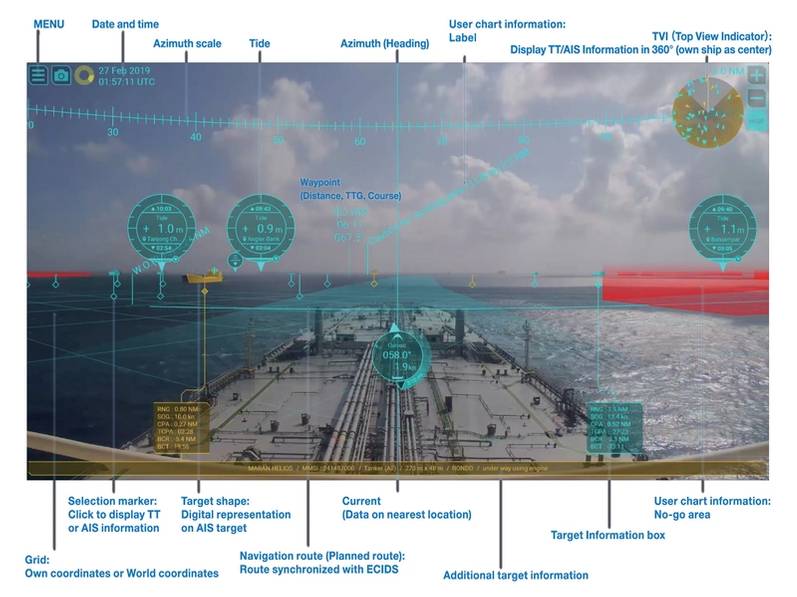

It’s already possible to have smart decision support on the bridge: With Furuno’s technology, live video of the view ahead of the vessel is overlaid with navigation information, including heading, AIS data, radar target tracking, object identification, route waypoints and chart information.

SEA.AI’s bridge support system can identify larger vessels not fitted with AIS up to 7.5 kilometers (about 4.7 miles) away, smaller vessels up to 3 kilometers (about 1.9 miles) away and flotsam up to 700 meters (about 0.4 miles) away.

Augmenting a watchkeeper’s situational awareness with technology can reduce fatigue and help them make better decisions, earlier. But it’s not easy to augment the skills of an experienced watchkeeper. A lot of effort goes into building the knowledge base that supports the digital interpretation of information.

One of the most common questions SEA.AI receives is whether its system can detect semi-submerged containers. A floating container is usually straightforward to detect because it is larger than a buoy, has a rigid rectangular structure and differs in temperature from the surrounding water. However, any object’s appearance can vary significantly with viewing angle, distance, sea conditions, level of submersion, orientation and inclination in the water, time of day, weather and sunlight intensity, among other variables. As a result, SEA.AI often needs hundreds of thousands of images before its system can identify an object confidently.

Technology company Orca AI has quantified the financial benefits of using digital support to avoid sharp maneuvers and route deviations. One customer, Seaspan Corp., recorded a 19% reduction in close encounters and a 20% increase in minimum average distance from other vessels using Orca AI’s navigation assistant, leading to estimated annual fuel savings of $100,000 per vessel.

Shipin Systems CEO Osher Perry claims operational results including a 42% reduction in incidents and a 17% increase in bridge manning compliance when the company’s AI-based camera system is installed in core operational areas throughout a vessel. The system provides real-time risk detection, such as early detection of fires, an unmanned bridge or improper PPE use, by integrating video data with ship systems including navigation, weather and machinery sensors. Some vessels have reported zero incidents within 180 days of deployment, while improved maintenance and early anomaly detection have reduced unplanned off-hire days by 30%.

Furuno is using AI to augment its systems, which can already superimpose a graphical virtual shape over AIS targets such as buoys, boats and ships to show their position in low-visibility conditions. The company is now developing an automatic or assisted docking system.
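To draw a shape over an AIS target, an overlay system first has to work out where that target falls in the camera image. The sketch below shows one simple way to do this for the horizontal axis only. It is not Furuno’s method: it assumes a forward-facing pinhole camera aligned with the ship’s heading, no roll or pitch, and a hypothetical 1920-pixel-wide image with a 60° horizontal field of view. The function names `initial_bearing_deg` and `overlay_column` are illustrative.

```python
import math
from typing import Optional


def initial_bearing_deg(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Initial great-circle bearing from point 1 to point 2, in degrees [0, 360)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return math.degrees(math.atan2(y, x)) % 360.0


def overlay_column(
    own_lat: float,
    own_lon: float,
    heading_deg: float,
    tgt_lat: float,
    tgt_lon: float,
    image_width: int = 1920,  # hypothetical camera resolution
    hfov_deg: float = 60.0,   # hypothetical horizontal field of view
) -> Optional[float]:
    """Pixel column for an AIS target, or None if it lies outside the camera view.

    Assumes the camera points along the ship's heading with no roll or pitch.
    """
    bearing = initial_bearing_deg(own_lat, own_lon, tgt_lat, tgt_lon)
    # Relative bearing in [-180, 180): negative is to port, positive to starboard.
    rel = (bearing - heading_deg + 180.0) % 360.0 - 180.0
    if abs(rel) >= hfov_deg / 2:
        return None
    # Pinhole model: focal length in pixels derived from the field of view.
    focal_px = (image_width / 2) / math.tan(math.radians(hfov_deg / 2))
    return image_width / 2 + focal_px * math.tan(math.radians(rel))


# Own ship at 0°N 0°E heading north; target slightly ahead and to starboard.
print(overlay_column(0.0, 0.0, 0.0, 0.01, 0.001))
```

A complete overlay would also need a vertical position (from the target’s range and the camera’s height) and corrections for the ship’s roll and pitch, which this sketch leaves out.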

Matt Wood, national sales manager for Furuno USA Inc., says the company has also taken part in several semi-manned and autonomous voyages in Japan. “One of the likeliest scenarios in the near future is that the human crew on board vessels will be augmented by both machine learning and visualization of the vessel situation from a shore side facility.”

He continues: “We are at a stage of AR development right now in which many tools are being created, many of which are good. However, there is no standardization in these displays. We cannot and should not remove the mariner from the equation, but we need a way to present them with the best possible information in as easy to recognize a way as possible.”

Furuno is participating in the OpenBridge project, led by the Oslo School of Architecture and Design in partnership with a wide range of companies including Kongsberg, Brunvoll and Vard. Together, the partners have developed a collection of tools and approaches for designing bridges based on modern user interface technology and human-centered design principles. The aim is to avoid the fragmentation that comes from having many different user interfaces on one bridge, which increases training requirements and the chance of human error.

Professor Kjetil Nordby, Oslo School of Architecture and Design. Image courtesy Kjetil Nordby.

Professor Kjetil Nordby’s focus on workplace design is extending to engine rooms and, most recently through the OpenZero project, to decarbonization technologies that boost energy efficiency and reduce fuel consumption. Partners in OpenZero include ABB, GE Marine and DNV.

All these projects are designed to support decision-making by crews, but the systems being developed are also the building blocks for the safe navigation and management of autonomous ships. For this, it’s the decision-making of machines that needs to be augmented.

“Predicting pedestrians and other vehicles or vessels is one of the most funded research areas in autonomous navigation in land, air or maritime systems,” says Professor Lokukaluge Prasad Perera from the Arctic University of Norway. Perera is testing models for predicting ship behavior at long and close range using neural networks that can learn from extensive databases, such as those generated on training simulators, as well as from onboard sensor and AIS data. The aim is to enable safe decision-making on autonomous ships and to help crews understand the behavior of autonomous ships if they encounter them.

Perera’s team is working on a large-scale (global) predictor that combines neural network learning with AIS data to predict up to 20 minutes of a ship’s trajectory. The team is also developing a local predictor that combines ship kinematic models with neural network learning from onboard ship performance data to predict the next 20 seconds of a ship’s trajectory accurately.
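The kinematic half of a short-horizon predictor can be illustrated with a constant turn rate and velocity (CTRV) model, a common textbook motion model. The sketch below rolls a vessel’s position forward in one-second steps over a 20-second horizon. It is not Perera’s model: positions are in a local east/north frame in meters, headings are measured clockwise from north, and the optional `residual` callback is a hypothetical hook where a learned correction (for example, a neural network trained on onboard performance data) could adjust each step.

```python
import math
from typing import Callable, List, Optional, Tuple

# Hypothetical hook for a learned correction: (east_m, north_m, heading_rad) -> (d_east, d_north)
Residual = Callable[[float, float, float], Tuple[float, float]]


def ctrv_step(east: float, north: float, heading: float,
              speed: float, turn_rate: float, dt: float) -> Tuple[float, float, float]:
    """Advance one step at constant speed (m/s) and turn rate (rad/s).

    Heading is clockwise from north, so east uses sin and north uses cos.
    """
    if abs(turn_rate) < 1e-9:  # effectively straight-line motion
        return (east + speed * dt * math.sin(heading),
                north + speed * dt * math.cos(heading),
                heading)
    new_heading = heading + turn_rate * dt
    radius = speed / turn_rate  # signed turning radius
    return (east + radius * (math.cos(heading) - math.cos(new_heading)),
            north + radius * (math.sin(new_heading) - math.sin(heading)),
            new_heading)


def predict_local(east: float, north: float, heading_deg: float,
                  speed_mps: float, turn_rate_dps: float,
                  horizon_s: float = 20.0, dt: float = 1.0,
                  residual: Optional[Residual] = None) -> List[Tuple[float, float, float]]:
    """Return [(t, east, north), ...] at each step over the horizon."""
    heading = math.radians(heading_deg)
    omega = math.radians(turn_rate_dps)
    path = []
    for k in range(1, int(round(horizon_s / dt)) + 1):
        east, north, heading = ctrv_step(east, north, heading, speed_mps, omega, dt)
        if residual is not None:  # optional learned adjustment (hypothetical)
            d_east, d_north = residual(east, north, heading)
            east, north = east + d_east, north + d_north
        path.append((k * dt, east, north))
    return path


# A ship at 5 m/s (about 9.7 knots) heading north while turning 2°/s to starboard.
for t, e, n in predict_local(0.0, 0.0, 0.0, 5.0, 2.0)[4::5]:
    print(f"t={t:4.0f}s  east={e:6.1f} m  north={n:6.1f} m")
```

The closed-form CTRV update keeps each step exact for a constant turn, so the only approximation is the assumption that speed and turn rate stay fixed over the horizon; that is the gap a learned correction would be meant to close.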

“The local predictor is important for many close ship encounter situations to evaluate the possible collision risk. Therefore, both local and global scale predictors can help autonomous ships to detect possible collision situations and then take appropriate action at an early stage,” says Perera. “When systems are making decisions, these early predictions are extremely important.”
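A standard way to put a number on close-encounter collision risk is the closest point of approach: given two ships’ positions and velocities, compute the time until they are nearest (TCPA) and how far apart they will be at that moment (DCPA). The sketch below is not taken from Perera’s work. It assumes both ships hold course and speed in a local east/north frame (meters, meters per second), and its 500-meter and 10-minute alarm thresholds are hypothetical placeholders. Trajectory predictors like the ones Perera describes could supply better motion estimates than this straight-line assumption.

```python
import math
from typing import Tuple

Vec = Tuple[float, float]  # (east, north)


def cpa(own_pos: Vec, own_vel: Vec, tgt_pos: Vec, tgt_vel: Vec) -> Tuple[float, float]:
    """Return (DCPA in meters, TCPA in seconds), assuming both ships hold course and speed."""
    rx, ry = tgt_pos[0] - own_pos[0], tgt_pos[1] - own_pos[1]  # relative position
    vx, vy = tgt_vel[0] - own_vel[0], tgt_vel[1] - own_vel[1]  # relative velocity
    v2 = vx * vx + vy * vy
    if v2 < 1e-12:  # no relative motion: the range never changes
        return math.hypot(rx, ry), 0.0
    # A negative time means the ships are already opening, so the closest point is now.
    tcpa = max(0.0, -(rx * vx + ry * vy) / v2)
    return math.hypot(rx + vx * tcpa, ry + vy * tcpa), tcpa


def is_risky(dcpa_m: float, tcpa_s: float,
             dcpa_limit_m: float = 500.0, tcpa_limit_s: float = 600.0) -> bool:
    """Flag an encounter whose closest approach is both near and soon (hypothetical thresholds)."""
    return dcpa_m < dcpa_limit_m and tcpa_s < tcpa_limit_s


# Crossing: own ship heads north at 10 m/s; target 1 km east and 1 km north heads west at 10 m/s.
d, t = cpa((0.0, 0.0), (0.0, 10.0), (1000.0, 1000.0), (-10.0, 0.0))
print(f"DCPA={d:.0f} m, TCPA={t:.0f} s, risky={is_risky(d, t)}")
# → DCPA=0 m, TCPA=100 s, risky=True
```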