Assessing Skills in the Maritime Industry

Never easy, but always a critically important task.

We must assess the ability of our mariners to perform the skills required to do their jobs safely and efficiently. Doing so objectively, and at the level of detail necessary to ensure safe operations and continuous improvement, is also very difficult. This is especially true in dynamic, team-based scenarios such as drills and complicated safety-critical activities. This article covers a novel initiative by one of the world’s largest cruise lines and its partners to provide a solution to this problem.

Introduction

There is no arguing that the maritime industry is a highly skills-based environment. Not only must our mariners possess all the necessary knowledge to be able to perform efficiently and safely, but in addition they must be able to demonstrate the critical skills required of their position in a wide variety of conditions. As a result, a robust maritime training program will always consist of deep attention paid to the teaching and assessment of both knowledge and skills. But the question is, how effective are our current tools and best practice techniques at teaching and assessing knowledge and skills? The answer is “very effective” in some cases, and “not at all” in others. The “not at all” part is a problem.

We have said above that we need to teach and assess knowledge and skills. Let’s break this down. On the knowledge side, we are in good shape. There is a tremendous body of excellent research, built over the decades, on how best to impart and assess knowledge. This was, in fact, my research area during 10 years as a computer science faculty member at a large research university. The latest research has unequivocally shown that knowledge is best taught using blended learning, which combines online learning and face-to-face training. Blended learning, if done properly, provides significantly better training and assessment outcomes than traditional face-to-face training alone. As a result, the implementation of blended learning programs by vessel operators is accelerating.

But what about skills? There has been much less research on how to most effectively teach and assess skills. On the teaching side, the maritime industry has led in many ways, and has also followed the example of other industries by employing comprehensive hands-on training and repeated practice in both real and simulated environments. When this kind of skills training is preceded by online learning of the knowledge that underlies those skills, the outcome can be excellent. But how do we know for sure? How can we deeply and comprehensively assess the skills we teach? It is actually quite difficult. In fact, the assessment of skills is easily the weakest link in the safety chain made up of effective knowledge and skills training and assessment.

Current Practice in Skills Assessment

Most operators do assess skills to some degree. But while it is easy to be compliant using a weak approach to skills assessment, it is more difficult to know whether your officers and crew are competent. Although current practice does include some very good examples at well-run simulation and training centers, structured and standardized tools to assess skills at a fine-grained level are almost non-existent.

Current best practice typically involves having a trained assessor observe the performance of skills in a simulator or at a training center, and then make an assessment based on what he or she observes. To be effective, this requires a highly trained and experienced skills assessor using a standardized approach in controlled conditions. This approach is therefore rarely available to most operators, and even when it is, it is variable in quality and often somewhat subjective.

More commonly, skills assessment involves little more than an assessor recording who participated in an exercise and then perhaps adding a few high-level comments as to how it went. This is common practice in skill demonstrations such as on-board drills (fire drills, life-boat drills, etc.). This wastes a tremendous opportunity to assess competency and leaves operators exposed to a significant risk created by having very little knowledge as to the details of team and individual skill performance. If we don’t have deep insight as to how our individuals and teams are able to perform their skills in a variety of conditions, how can we hope to improve? How can we know we are operating safely and efficiently?

A Solution

It was this nagging question that inspired the training experts at one of the world’s largest cruise lines to find a solution. Working together with Marine Learning Systems as their training technology partner, they developed and are realizing their vision of making comprehensive, objective and highly detailed skills assessments a reality. The vision and the technology to support it have evolved over time and involve input and significant expertise from a broad range of experts in safety training, human factors, simulation and maritime operations. Together these partners are joining the effort to finally create an accessible approach and electronic toolset for skills assessment.

The Skills Assessor App

The fundamental goal is to turn any demonstration of skill by teams or individuals into an opportunity for assessment. This can include on-board drills, simulation exercises and even real performance of dangerous duties like confined space entry and others. All of these are demonstrations of skills which can be assessed. All that is needed is a toolset to facilitate objective and comprehensive real-time assessment. That is the goal of the “Skills Assessor.”

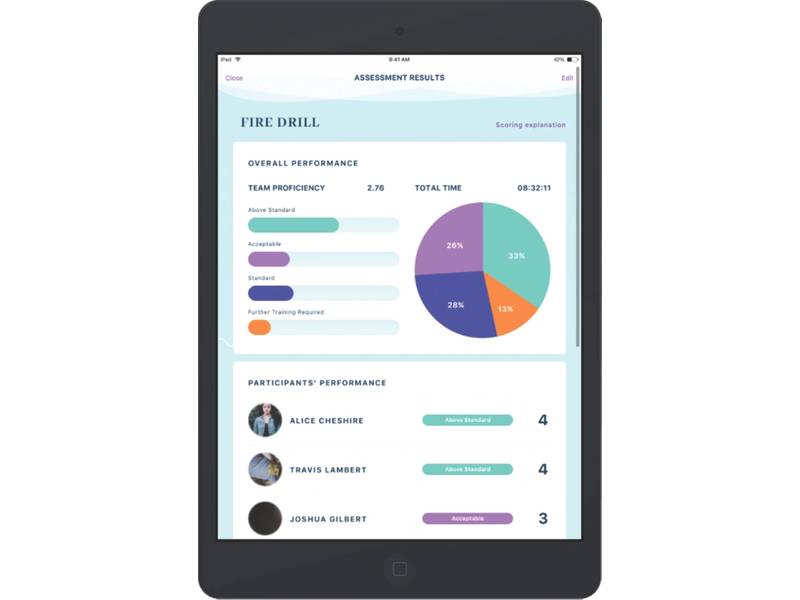

The skills assessor is an app that runs on a tablet and is linked into the Marine Learning Systems LMS (learning management system). The app presents a simple structured assessment framework or “form” that corresponds to the skills being assessed. The form essentially lists a set of possible actions that are appropriate for the skill being demonstrated. As the mariners perform their duties or demonstrate their skills, the assessor indicates, for each participant, which of the actions were actually observed.

For example, let’s say we are assessing a team practicing a collision scenario in a simulator. The form in the skills assessor app will display a set of actions consistent with the activity for each participant. The role of the assessor is to mark each action when (and if) it is observed. Initial actions in a collision exercise, for instance, might include calling out the event, adjusting propulsion, notifying the master, creating an incident log, and so on. The form can also indicate other performance requirements, such as speaking in a loud and clear voice.

As the scenario unfolds, the assessor will indicate with a single click each action (or “performance indicator”) that was observed and who performed it. The performance indicators are intentionally simple so as to remove any subjectivity on the part of the assessor, and to allow rapid assessment in a fast-paced, fluid situation. At the end of the scenario, a report will be generated which provides remarkable detail on how the team performed as a whole, and how each individual contributed to that performance.
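To make the idea of a form concrete, here is a minimal sketch in Python of how such a form and its single-click marking might be modeled. It is an illustration only, not the app’s actual design: the class names, field names, participant roles, and the choice of which actions count as critical are all assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PerformanceIndicator:
    """One simple, discrete action the assessor can mark (hypothetical model)."""
    key: str
    description: str
    critical: bool = False  # assumption: some actions are flagged as critical


@dataclass(frozen=True)
class Observation:
    """A single click: who performed which action, and when."""
    indicator_key: str
    participant: str
    seconds_into_scenario: float


@dataclass
class AssessmentForm:
    """The form for one skill demonstration (hypothetical model)."""
    skill: str
    indicators: list[PerformanceIndicator]
    participants: list[str]
    observations: list[Observation] = field(default_factory=list)

    def mark(self, indicator_key: str, participant: str,
             seconds_into_scenario: float) -> None:
        """Record that a participant was observed performing an action."""
        if indicator_key not in {i.key for i in self.indicators}:
            raise KeyError(f"unknown indicator: {indicator_key}")
        if participant not in self.participants:
            raise ValueError(f"unknown participant: {participant}")
        self.observations.append(
            Observation(indicator_key, participant, seconds_into_scenario)
        )

    def observed_by(self, participant: str) -> list[str]:
        """Return the actions marked for one participant, in click order."""
        return [o.indicator_key for o in self.observations
                if o.participant == participant]


# Illustrative collision-exercise form using the actions named above.
form = AssessmentForm(
    skill="Collision response",
    indicators=[
        PerformanceIndicator("call_out", "Calls out the event", critical=True),
        PerformanceIndicator("adjust_propulsion", "Adjusts propulsion", critical=True),
        PerformanceIndicator("notify_master", "Notifies the master", critical=True),
        PerformanceIndicator("incident_log", "Creates an incident log"),
    ],
    participants=["officer_of_the_watch", "helmsman"],
)

form.mark("call_out", "officer_of_the_watch", 4.0)
form.mark("adjust_propulsion", "helmsman", 9.5)
```

Keeping each indicator to a yes-or-no observation, as in this sketch, is what lets the assessor record it with one click and without a judgment call.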

Actionable Data

Despite the fact that the performance indicators being recorded are intentionally very simple discrete actions, the depth of insight that can be derived is substantial. At the most basic level, the report will indicate whether the scenario was concluded successfully. It will show whether any critical actions were missed by the team or by an individual. It can reveal the timing of team member actions against scenario events in order to provide insight about individual and team reaction time and efficiency. Data from the skills assessor could be used to determine who among the team tended to lead and who tended to follow, those who contributed more, and those who contributed less.
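As a rough illustration of how such simple records could yield these insights, the Python sketch below summarizes a handful of observations. The data, the set of critical actions, the event time, and the `summarize` function are all hypothetical, invented for this example rather than taken from the actual tool.

```python
from collections import Counter

# Hypothetical inputs: which actions are critical, and when the scenario
# event (the collision) occurred, in seconds from the start of the exercise.
CRITICAL_ACTIONS = {"call_out", "adjust_propulsion", "notify_master"}
SCENARIO_EVENT_AT = 0.0

# Each record is (action, participant, seconds into the scenario).
observations = [
    ("call_out", "officer_of_the_watch", 4.0),
    ("adjust_propulsion", "helmsman", 9.5),
    ("incident_log", "officer_of_the_watch", 61.0),
]


def summarize(observations, critical_actions, event_time):
    """Derive a few basic team measures from the recorded clicks."""
    seen = {action for action, _, _ in observations}
    # Critical actions that nobody on the team was observed performing.
    missed = sorted(critical_actions - seen)
    # Time from the scenario event to the first observed action.
    times_after_event = [t for _, _, t in observations if t >= event_time]
    reaction = min(times_after_event) - event_time if times_after_event else None
    # How many observed actions each participant contributed.
    contributions = Counter(who for _, who, _ in observations)
    return {
        "missed_critical": missed,
        "reaction_seconds": reaction,
        "actions_per_participant": dict(contributions),
    }


report = summarize(observations, CRITICAL_ACTIONS, SCENARIO_EVENT_AT)
```

With this sample data, the summary flags that nobody notified the master, gives a reaction time of four seconds, and shows the officer of the watch contributing two actions to the helmsman’s one.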

Attributes such as assertiveness, leadership, hesitancy, and others can be derived. The difference between failure, success and mastery can be accurately determined, broken down by individual skill. A wealth of useful and actionable data is derived, telling us what we are doing well, and where the gaps are. And deeper analysis of the data will even reveal how factors like team composition, training history, and other influences affect performance, and how they can be optimized to improve it. The full utility of the data can only be imagined at this point.

Using the skills assessor, the cruise line will have the ability to gather skill performance information at any time from a huge variety of daily activities, creating an increasingly valuable database to be mined for insights. It can be used to assess current readiness, track the development of individual skills and employee attributes over time, compare performance across teams and vessels, and act as a rich source of data to inform a continuous improvement program. It will finally shine a light on one of the most important black holes in determining officer and crew core competence – skill proficiency.

Next Steps

The skills assessor has been used in prototype form at a large maritime simulation training center for over a year, and the first release of the full version is due in January of 2018. Even though this development has been largely based on the insights and expertise of those at the cruise line and their partners, the skills assessor will be available to the industry at large in the hope that the safety of all can benefit.

The Author

Murray Goldberg is CEO of Marine Learning Systems (www.MarineLS.com). An eLearning researcher and developer, his software has been used by 14 million people worldwide.

(As published in the January 2018 edition of Marine News)